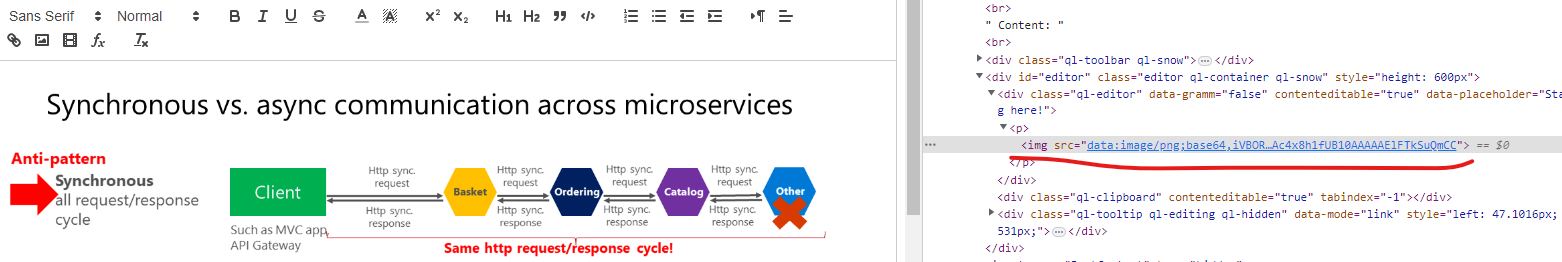

Many of us know what a WYSIWYG editor is: a JavaScript rich text editor where you can write content with various colors and formats, which gets encoded into HTML that you can store. Later you just fetch that HTML and render it on your page. There are many free WYSIWYG editors you can explore. My favorite is Quill.js, a very light and simple tool. In most WYSIWYG editors you can also add images to make your content look better. By default, these images are usually converted to a base64 string and placed directly in the `src` attribute as a data URI. For example: `<img src="data:image/png;base64,iVBORw0KGgo...">` (payload truncated here).
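To make the encoding concrete, here is a minimal C# sketch of what an editor effectively does when it embeds an image: Base64-encode the raw bytes and wrap them in a `data:` URI inside the `src` attribute. The `DataUriDemo` class and its methods are hypothetical names used only for illustration; they are not part of Quill or any editor's API.

```csharp
using System;

// Hypothetical helper (illustration only): mimics how an editor embeds an image inline.
public static class DataUriDemo
{
    // Builds a data URI of the form "data:<mimeType>;base64,<payload>".
    public static string ToDataUri(byte[] imageBytes, string mimeType)
    {
        string payload = Convert.ToBase64String(imageBytes);
        return $"data:{mimeType};base64,{payload}";
    }

    // Wraps the data URI in an <img> tag, the way it ends up in the saved HTML.
    public static string ToImgTag(byte[] imageBytes, string mimeType) =>
        $"<img src=\"{ToDataUri(imageBytes, mimeType)}\">";
}
```

For instance, `DataUriDemo.ToDataUri(new byte[] { 0x89, 0x50, 0x4E, 0x47 }, "image/png")` (the first four bytes of a PNG signature) returns `data:image/png;base64,iVBORw==`. A real image simply produces a much longer payload.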

Now imagine you have 10 images in your article, each around 1 MB. On top of that, base64 encoding increases the data size by about 33% (every 3 bytes become 4 characters). So your article is now roughly 13 MB, and all of it gets inserted into your database, consuming a lot of storage. Pretty crazy, huh? Fortunately, there are solutions. I'll tell you about two:
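The growth comes from how base64 works: every 3 input bytes become 4 output characters, with the last group padded. A small C# helper makes the arithmetic explicit; the `Base64Size` class is a hypothetical name used only for illustration.

```csharp
using System;

// Hypothetical helper (illustration only): predicts base64 output size.
public static class Base64Size
{
    // Every 3 input bytes map to 4 output characters; a partial last group is padded to 4.
    public static long EncodedLength(long byteCount) => 4 * ((byteCount + 2) / 3);

    // Percentage by which the encoded text is larger than the raw bytes.
    public static double OverheadPercent(long byteCount) =>
        byteCount == 0 ? 0 : (EncodedLength(byteCount) - byteCount) * 100.0 / byteCount;
}
```

For the example above, `10 * Base64Size.EncodedLength(1_048_576)` is 13,981,040 characters, roughly 13.3 MB of text for 10 MB of images, before counting the rest of the HTML.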

- Frontend Approach: Most WYSIWYG editors let you create custom plugins, so you can build an API that uploads the file, then take the returned link and set it as the `src` attribute. Simple! But I found an issue there: every image gets uploaded as soon as I add it, even if I later remove or replace it. If I edit my article with images many times, my application/file storage fills up with unused images.

- Backend Approach: When I'm done writing and finally save the content, the backend scrapes all the base64 images from the HTML, uploads them to my server, and then replaces the base64 data with the new image links. Let me share that story with you today.

Backend Approach

For scraping HTML there's a great NuGet package called Html Agility Pack. You can install it through the NuGet Package Manager Console:

```powershell
Install-Package HtmlAgilityPack
```

Let's explore the code first:

- First, we load the raw HTML, which includes the base64 images.

- Next, we select all the `img` nodes.

- For each node, we take the base64 data from the `src` attribute, convert it to a memory stream and tag it with a GUID, so that later we can replace these IDs with image URLs.

- Once every node has been processed, we return an `ImgScapperResult`, which holds the memory streams and the rewritten content (where the IDs will later be replaced with the image URLs).

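```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text.RegularExpressions;
using HtmlAgilityPack;

public static class ImgScapper
{
    public static ImgScapperResult ScapImages(string rawContent)
    {
        // Placeholder ID ("<guid>.<extension>") -> decoded image bytes
        var imgReferences = new Dictionary<string, MemoryStream>();

        var htmlDoc = new HtmlDocument();
        htmlDoc.LoadHtml(rawContent);

        // SelectNodes returns null, not an empty collection, when nothing matches
        var images = htmlDoc.DocumentNode.SelectNodes("//img");

        if (images == null)
        {
            return new ImgScapperResult
            {
                Content = rawContent,
                ImgReferences = imgReferences
            };
        }

        foreach (var image in images)
        {
            // Skip <img> tags with no src or with a regular URL instead of a base64 data URI
            var imageSrcValue = image.GetAttributeValue("src", string.Empty);
            if (!imageSrcValue.StartsWith("data:image/", StringComparison.OrdinalIgnoreCase))
            {
                continue;
            }

            var imageStreamInfo = GetImageFromBase64(imageSrcValue);

            if (imageStreamInfo.Data.Length > 0)
            {
                // Tag the image with a unique placeholder; it is swapped for the real URL after upload
                string imgRefId = $"{Guid.NewGuid()}.{imageStreamInfo.Type}";
                imgReferences.Add(imgRefId, new MemoryStream(imageStreamInfo.Data));
                image.SetAttributeValue("src", imgRefId);
            }
        }

        return new ImgScapperResult
        {
            Content = htmlDoc.DocumentNode.OuterHtml,
            ImgReferences = imgReferences
        };
    }

    private static ImageStreamInfo GetImageFromBase64(string base64StringContent)
    {
        try
        {
            // "type" captures e.g. "png;base64", "data" captures the base64 payload
            string base64Data = Regex.Match(base64StringContent, "data:image/(?<type>.+?),(?<data>.+)")
                .Groups["data"].Value;

            return new ImageStreamInfo
            {
                Type = GetBase64FileExtension(base64Data),
                Data = Convert.FromBase64String(base64Data)
            };
        }
        catch (Exception ex)
        {
            // An empty payload (IndexOutOfRangeException) or invalid base64 (FormatException) lands here
            Console.WriteLine(ex.Message);
            return new ImageStreamInfo { Type = "unknown", Data = Array.Empty<byte>() };
        }
    }

    // Guesses the file type from the first base64 character,
    // which is determined by the file's leading "magic" bytes
    private static string GetBase64FileExtension(string base64String)
    {
        switch (base64String[0])
        {
            case '/': return "jpeg"; // FF D8 FF    -> "/9j/"
            case 'i': return "png";  // 89 50 4E 47 -> "iVBOR..."
            case 'R': return "gif";  // "GIF"       -> "R0lG..."
            case 'U': return "webp"; // "RIFF"      -> "UklG..."
            case 'J': return "pdf";  // "%PDF"      -> "JVBE..."
            default: return "unknown";
        }
    }
}

public class ImgScapperResult
{
    public string Content { get; set; } = default!;

    public Dictionary<string, MemoryStream> ImgReferences { get; set; } = new Dictionary<string, MemoryStream>();
}

public class ImageStreamInfo
{
    public string Type { get; set; } = default!;

    public byte[] Data { get; set; } = default!;
}
```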
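You might wonder why `GetBase64FileExtension` can guess the format from a single character. Each format starts with fixed "magic bytes", and base64-encoding them always produces the same leading character. The sketch below encodes each signature with `Convert.ToBase64String` to check the mapping; the `MagicPrefixDemo` class is hypothetical and exists only for this check.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (illustration only): shows which base64 character each file signature starts with.
public static class MagicPrefixDemo
{
    // Well-known leading bytes ("magic numbers") of each format.
    public static readonly Dictionary<string, byte[]> Signatures = new Dictionary<string, byte[]>
    {
        ["jpeg"] = new byte[] { 0xFF, 0xD8, 0xFF },       // JPEG start-of-image marker
        ["png"]  = new byte[] { 0x89, 0x50, 0x4E, 0x47 }, // "\x89PNG"
        ["gif"]  = new byte[] { 0x47, 0x49, 0x46 },       // "GIF"
        ["webp"] = new byte[] { 0x52, 0x49, 0x46, 0x46 }, // "RIFF" container header
        ["pdf"]  = new byte[] { 0x25, 0x50, 0x44, 0x46 }, // "%PDF"
    };

    // Base64-encodes the signature and returns its first character.
    public static char FirstBase64Char(byte[] signature) =>
        Convert.ToBase64String(signature)[0];
}
```

Calling `FirstBase64Char` on each signature gives `/`, `i`, `R`, `U` and `J`, matching the switch. Keep in mind this is a heuristic: the first character only reflects the top six bits of the first byte, and `U` matches any RIFF container (WAV and AVI as well as WebP). Since the data URI already carries the MIME type (the `type` group in the regex), reading it from there could be a more reliable alternative.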

Now that we have the scrapper result, we can upload the images and replace each placeholder ID in the HTML with the image's URL. Here's how we did that:

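```csharp
// Runs inside an async method of a class that has _fileUploadService and
// _fileServiceUrl fields (not shown here); .Any() needs "using System.Linq;"
string article = "your-raw-html-data";

var imgScapperResult = ImgScapper.ScapImages(article);

if (imgScapperResult != null && imgScapperResult.ImgReferences.Any())
{
    foreach (var imageId in imgScapperResult.ImgReferences.Keys)
    {
        // The placeholder ID (GUID + extension) doubles as the uploaded file name
        var fileName = imageId;
        var data = imgScapperResult.ImgReferences[imageId];

        await _fileUploadService.UploadFileAsync(fileName, data);

        // Swap the placeholder in the src attribute for the public URL
        string imageUrl = $"{_fileServiceUrl}/{fileName}";
        imgScapperResult.Content = imgScapperResult.Content.Replace(imageId, imageUrl);
    }

    article = imgScapperResult.Content;
}
```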
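The post doesn't show `_fileUploadService` or `_fileServiceUrl`. As a sketch under that assumption, the service could be as simple as the hypothetical `IFileUploadService` interface below, with a hypothetical `LocalFileUploadService` that writes files to a local folder; in production you would more likely point it at cloud or CDN storage.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

// Hypothetical contract for _fileUploadService; the original post does not define it.
public interface IFileUploadService
{
    Task UploadFileAsync(string fileName, Stream data);
}

// Hypothetical implementation that stores uploads in a local folder.
public class LocalFileUploadService : IFileUploadService
{
    private readonly string _rootFolder;

    public LocalFileUploadService(string rootFolder)
    {
        _rootFolder = rootFolder;
        Directory.CreateDirectory(rootFolder); // no-op if the folder already exists
    }

    public async Task UploadFileAsync(string fileName, Stream data)
    {
        // Path.GetFileName drops any directory parts, guarding against "../" in names.
        string targetPath = Path.Combine(_rootFolder, Path.GetFileName(fileName));

        // Rewind in case the stream has already been read.
        if (data.CanSeek)
        {
            data.Position = 0;
        }

        await using var file = File.Create(targetPath);
        await data.CopyToAsync(file);
    }
}
```

With a setup like this, `_fileServiceUrl` would be whatever public base URL serves that folder.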

That's it! That's how I solved the issue on the backend. I'm not quite sure whether it's a good approach, but it was quite fun to build :D Let me know your thoughts on this too!